What Traditional Server Frameworks Like Node Can Learn From FaaS

How can Node or Java take inspiration from Lambda?

Benefits of FaaS

Functions as a Service come with many benefits:

A monolith can be hard to understand and maintain, slow for new engineers to ramp up on, and slow to start up. In contrast, each Function is independent and pulls in only the code and dependencies needed for that endpoint, keeping it manageable.

Bugs in one Function don’t affect others, unlike with a monolith, where you can corrupt a global object.

State from one Function doesn’t affect others, whether other endpoints or other concurrent executions of the same HTTP endpoint.

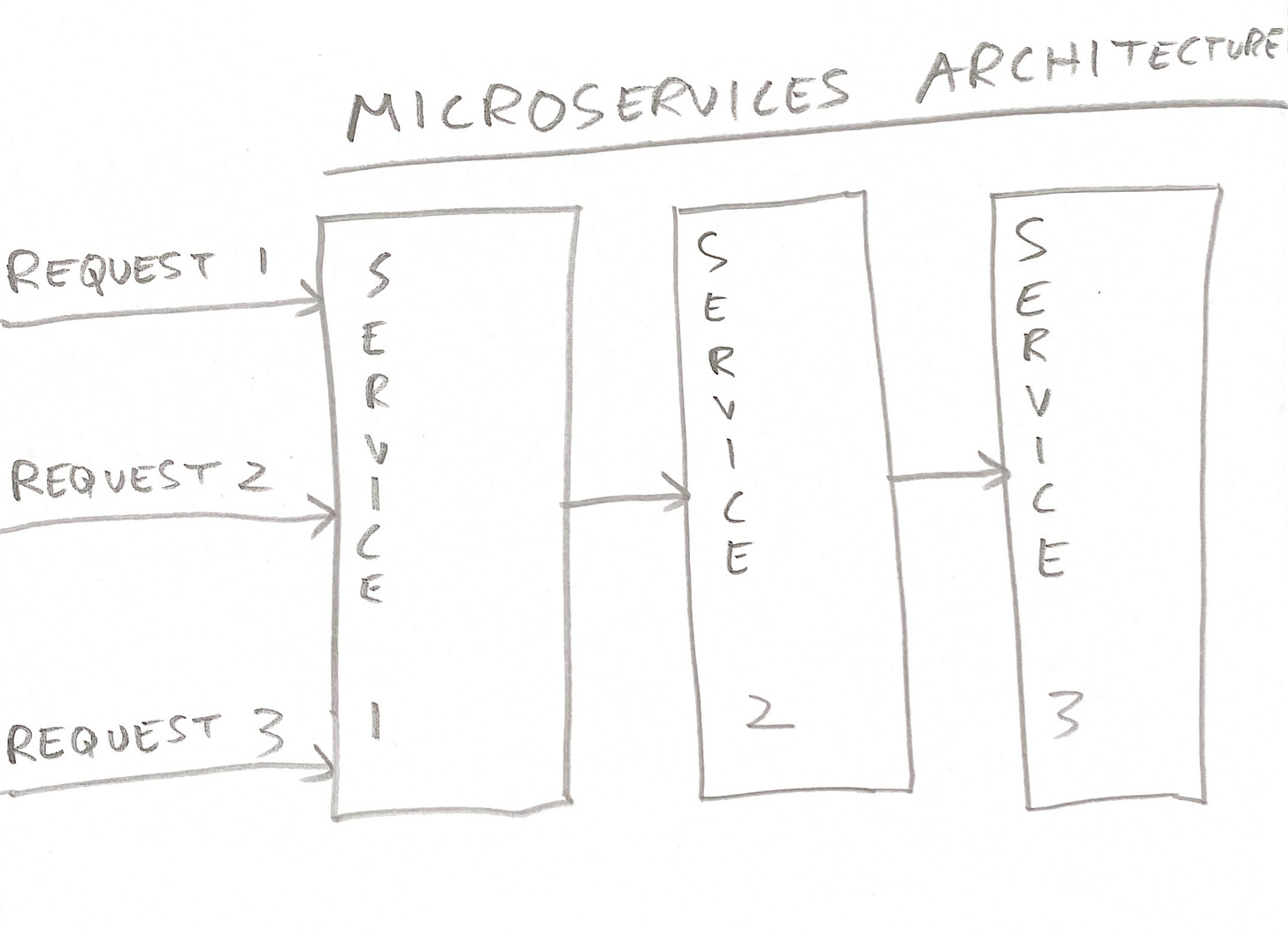

Functions are a better programming model than a traditional microservices architecture. Notice in this infographic how the slices are horizontal with FaaS rather than vertical with microservices:

FaaS is more secure since different Functions can run under different service accounts that each have the minimum privileges needed.

Drawbacks of FaaS

In exchange for all the aforementioned benefits, FaaS has its own downsides:

You’re locked in to Google or AWS and can’t move to a different cloud like Linode, which can be much cheaper. You can’t even move a Google Cloud Function to AWS or an AWS Lambda Function to Google! At least, not as easily as you can move a VM. If you’re operating at scale and find it cheaper to move off the cloud, you can’t. Nor can you offer an on-premises version of your service for customers to run in their own datacenters.

FaaS can suffer from cold starts, which can make it unsuitable for latency-sensitive, user-facing requests.

FaaS can cost 6x as much as VMs. If you’re operating at scale, spending millions of dollars more every month for FaaS may not be worth the productivity benefits. These fees can make some businesses unprofitable, especially those with a low ARPU (like freemium) or heavy compute needs (the opposite of a CRUD app).

The Best of Both Worlds

What can traditional server frameworks like Node or Java learn from FaaS? How can they offer some of the benefits of FaaS to traditional servers running in a VM?

In this blog post, I’d like to discuss an idea I have to this end: sandboxing. Each HTTP request should run in a separate sandbox. These should be completely different logical entities. Each should have its own copy of global and static variables, so that you can have a global variable user that represents the currently logged in user. It won’t conflict between requests, each of which runs in its own sandbox. You will no longer have to worry about which data should be request-scoped.
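To make the idea concrete, here is a minimal sketch of per-request globals using Node's built-in vm module. The handler source and the request shape are made up for illustration. Note that Node's documentation says the vm module is not a security mechanism, so this only approximates the programming model, not the isolation a real sandbox would need.

```javascript
const vm = require("node:vm");

// Hypothetical handler source. It reads and writes a "global" named `user`.
const handlerSource = `
  user = request.cookie;            // looks global, but lives in this sandbox only
  response = "hello, " + user;
`;
const script = new vm.Script(handlerSource); // compile once, run many times

// Run one request in a brand-new context with its own set of globals.
function handleRequest(request) {
  const sandbox = vm.createContext({ request, user: null, response: null });
  script.runInContext(sandbox);
  return sandbox.response;
}

console.log(handleRequest({ cookie: "alice" })); // hello, alice
console.log(handleRequest({ cookie: "bob" }));   // hello, bob
```

Each call builds a new context, so the `user` written by one request is never visible to another, and the outer program has no `user` variable at all.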

Carelessly written code running in one sandbox should not affect other sandboxes, so unsafe APIs should be removed or modified. For example, we think of Java as not having pointers, but it does, via the sun.misc.Unsafe class, which lets you bypass Java’s memory safety. Another example is using reflection to bypass access control and access private variables of other classes. Such APIs should be removed. Others should be modified: stdin should be separate for each sandbox so that sandboxes don’t interfere with each other, and System.exit(), which terminates the VM, should terminate only the sandbox. Taking a step back from these specific examples, any API that lets code break out of its sandbox and affect other sandboxes should be removed or modified.
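The same idea can be sketched in Node terms: a sandbox sees only the APIs it is handed, and a process-wide call like exit is replaced with a version that ends just that sandbox. The names `runSandboxed` and `SandboxExit` are hypothetical, and the vm module used here is a convenience for illustration rather than a hardened boundary.

```javascript
const vm = require("node:vm");

// Thrown by the sandbox's exit() so the host can tell it apart from real errors.
class SandboxExit extends Error {}

// Run `source` with a curated set of globals and return its exit code.
function runSandboxed(source) {
  const context = vm.createContext({
    // Replacement for process.exit(): ends this sandbox, not the whole server.
    exit(code) {
      throw new SandboxExit(String(code));
    },
  });
  try {
    vm.runInContext(source, context);
    return 0; // ran to completion
  } catch (err) {
    if (err instanceof SandboxExit) return Number(err.message);
    throw err; // a genuine error in the sandboxed code
  }
}

console.log(runSandboxed("exit(3); neverRuns()")); // 3
// A new context has no `process` or `require` unless the host passes them in.
console.log(vm.runInContext("typeof process", vm.createContext({}))); // undefined
```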

You should be able to limit the CPU, memory and disk space utilised by sandboxes.

These sandboxes should not be OS processes or Docker containers, each running a separate copy of the VM [1], because these consume a lot of memory and start slowly. A Spring application can take 6 seconds to start, which is unacceptable latency to add to each user request! A lot of time also goes into context-switching between processes, which limits the number of processes you can have, and so the number of requests you can serve.

Instead, sandboxes should be implemented within the VM. Erlang pioneered this concept, and some other modern VMs have it, too. For example, V8 has Isolates.

An isolate requires only 3 MB of memory and starts up in 5 ms. This doesn’t add noticeable latency to user requests. For example, I prefer to have a peak latency of 100ms, and this is impossible if each sandbox takes 100ms to start.

Traditional server frameworks like Node or Java should offer an option to run each HTTP request in a separate sandbox, to benefit from the advantages of FaaS. They should implement this by using the sandboxing features of the underlying VM, like Isolates in V8. VMs that don’t support isolates, like the JVM, should be enhanced to do so.
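Node already exposes something close to this: each worker_threads Worker runs in its own V8 isolate, and the resourceLimits option caps that isolate's heap and stack. The sketch below is one possible shape for "one isolate per request". The handler source, the `runInIsolate` name, and the limit values are assumptions. A Worker also boots a Node environment, so it is likely heavier than a bare isolate, and resourceLimits covers memory only, not CPU or disk.

```javascript
const { Worker } = require("node:worker_threads");

// Code that runs inside the worker's own isolate (hypothetical handler).
const workerSource = `
  const { parentPort, workerData } = require("node:worker_threads");
  parentPort.postMessage("handled " + workerData.path);
`;

// Run one request in a separate isolate with a capped heap.
function runInIsolate(request) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, {
      eval: true,            // treat the first argument as source code, not a file path
      workerData: request,   // copied into the worker
      resourceLimits: { maxOldGenerationSizeMb: 64, maxYoungGenerationSizeMb: 8 },
    });
    worker.once("message", resolve);
    worker.once("error", reject); // e.g. the worker ran out of memory
    worker.once("exit", (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
  });
}

runInIsolate({ path: "/videos" }).then(console.log); // handled /videos
```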

The advantage of sandboxes within a traditional server like Node or Java, as opposed to using Lambda or Google Cloud Functions, is that you no longer have a cold start problem. You can run on EC2, benefiting from 6x lower prices than Lambda. Your code is also open-source and portable, rather than locked in to a cloud provider. You can run on Linode, which can be even cheaper than EC2. Or your company’s datacenter.

With sandboxed requests, you won’t have to worry about concurrent requests stepping on each other’s toes.

Further, every HTTP request should execute in a new sandbox, rather than reusing a sandbox from before. AWS Lambda reuses idle lambdas, which can cause state from the previous invocation to leak into the next. For example:

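    // Module-level variable: it outlives a single invocation when the instance is reused.
    var is_admin = false

    exports.handler = async function(event, context) {
        // `database` is the application's own data-access module, not shown here.
        if (database.get_user_type(event.cookie) == "admin") {
            is_admin = true
        }
        ...
    }

This looks correct, right?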

Wrong: once is_admin is set to true, because one request was made by an admin, it remains true for subsequent requests, even if they’re not admins, because it’s never reset to false. Do you see how easy it is to make mistakes when sandboxes are reused? So, let’s not reuse them. This makes sandboxing within an app framework like Node an even simpler programming model than Lambda.
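The difference between reusing a sandbox and creating a fresh one can be reproduced in a few lines with Node's vm module. In this sketch the database lookup is replaced by a hypothetical `user_type` field on the event so that it runs on its own, and `loadHandler` is an illustrative name.

```javascript
const vm = require("node:vm");

// The module under test: a module-level flag plus a handler that sets it.
const moduleSource = `
  var is_admin = false;
  function handler(event) {
    if (event.user_type == "admin") {
      is_admin = true;
    }
    return is_admin;
  }
`;
const script = new vm.Script(moduleSource);

// Load the module into a new context and return that context's handler.
function loadHandler() {
  const context = vm.createContext({});
  script.runInContext(context);
  return context.handler;
}

// Reused sandbox: load once, serve every request from the same globals.
const reused = loadHandler();
const reusedResults = [
  reused({ user_type: "admin" }),
  reused({ user_type: "guest" }),
];

// Fresh sandbox: load the module again for every request.
const freshResults = [
  loadHandler()({ user_type: "admin" }),
  loadHandler()({ user_type: "guest" }),
];

console.log(reusedResults); // [ true, true ]   the guest inherits admin
console.log(freshResults);  // [ true, false ]
```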

Sandboxes could have a longer timeout than the 15-minute limit Lambda imposes. You should be able to set it to whatever you want.

Unlike Lambda, a sandbox should be allowed to continue running after returning an HTTP response. Imagine a video transcoding function that accepts a video upload, returns HTTP 200, and continues running in the background for a day to transcode the entire video. When it’s done, it exits [2]. This makes sandboxing within an app framework like Node more flexible than Lambda.

You should be able to set a deadline for a sandbox. For example, if the load balancer waits only 1 minute for the backend to respond, there’s no point in giving the backend more than a minute to process the request, since the response won’t reach the client anyway. In such cases, you could set a deadline of 1 minute wall time for the sandbox. One company I know has a bug in their VM that, when triggered, causes the VM to take up 100% CPU till it’s restarted. This happens infrequently, but causes an outage when it does. With sandboxes, the unresponsive sandbox gets killed when its timeout expires, say after a minute, limiting the outage to a minute rather than the whole day [3].
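Node's vm module has a small-scale version of this: the timeout option stops a script that runs past a wall-time budget. The sketch below uses a 100 ms deadline and a deliberate infinite loop to stand in for the runaway code. The `runWithDeadline` name and result shape are made up for illustration, and the timeout only covers synchronous execution, so a real implementation would need more than this.

```javascript
const vm = require("node:vm");

// Run `source` in a fresh context, giving up after `deadlineMs` of wall time.
function runWithDeadline(source, deadlineMs) {
  const context = vm.createContext({});
  try {
    const value = vm.runInContext(source, context, { timeout: deadlineMs });
    return { ok: true, value };
  } catch (err) {
    if (err.code === "ERR_SCRIPT_EXECUTION_TIMEOUT") {
      return { ok: false, reason: "deadline exceeded" };
    }
    throw err; // some other failure inside the sandboxed code
  }
}

console.log(runWithDeadline("1 + 1", 100));           // finishes well within the deadline
console.log(runWithDeadline("while (true) {}", 100)); // stopped after about 100 ms
```

The server keeps running after the second call; only the runaway script is terminated.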

In summary, traditional server frameworks like Node or Java should think about introducing sandboxing as an option, to offer the benefits of the FaaS programming model to engineers who want them.

[1] The language VM, like the JVM, not EC2 VMs.

[2] Such as by returning from main() or calling System.exit().

[3] There are alternative ways of limiting how much CPU a sandbox can use:

For one, you can limit CPU time instead of wall time.

For another, you can limit the number of vCPUs that can be used by a sandbox. If you limit it to 1, the sandbox can use only one hardware thread (hyperthread), or run two hardware threads, each of which sleeps 50% of the time.